Hi, I'm Eric.

I’m an avid world traveler, photographer, software developer, and digital storyteller.

I help implement the Content Authenticity Initiative at Adobe.

Hi, I'm Eric.

I’m an avid world traveler, photographer, software developer, and digital storyteller.

I help implement the Content Authenticity Initiative at Adobe.

21 October 2025

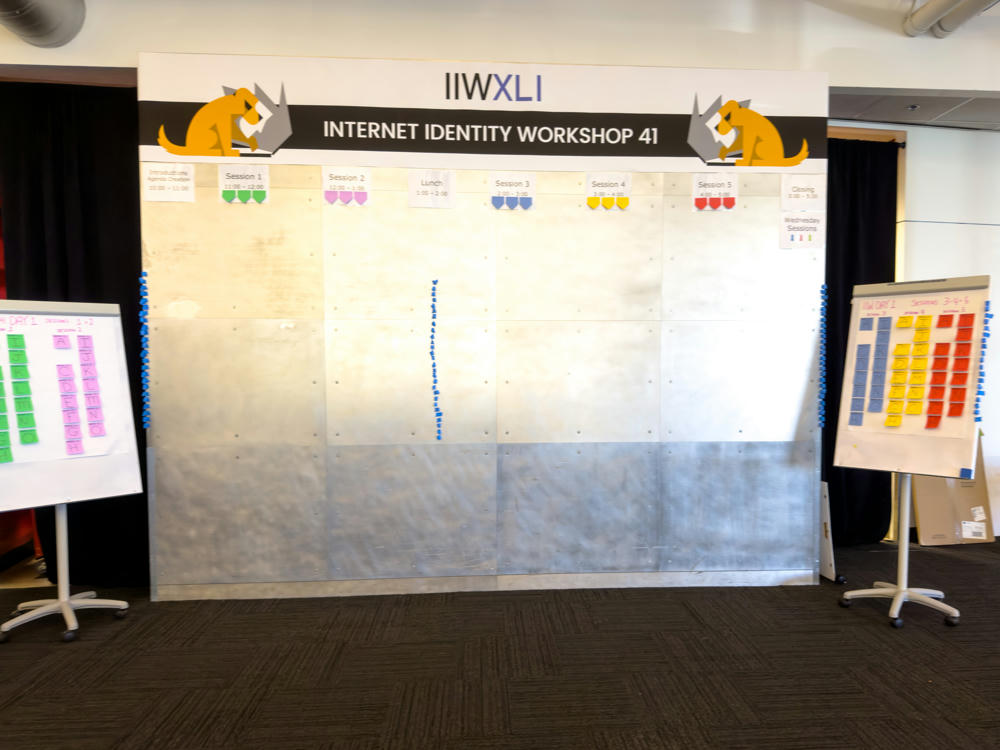

This week I’m attending the 41st biannual Internet Identity Workshop, which is one of the most valuable conferences I’ve encountered in any professional space. As the name might suggest, the topics are largely around how to express human and organizational identity in digital terms that respect privacy and security.

I’m part of a team at Adobe that is dedicated to helping content creators and content consumers establish genuine connections with each other. We do this through three organizations that we’ve helped to create:

Content Authenticity Initiative: CAI is a community of media and tech companies, NGOs, academics, and others working to promote adoption of an open industry standard for content authenticity and provenance. The CAI does outreach, advocacy, and education around these open standards. Content Authenticity Initiative is also the name of the business unit of which I’m a part at Adobe through which we participate in all three of these organizations, develop open source and open standards, and guide implementation within Adobe’s product and service suite.

Coalition for Content Provenance and Authenticity: C2PA is a technical standards organization which addresses the prevalence of misleading information online through the development of technical standards for certifying the source and history (or provenance) of media content.

Creator Assertions Working Group: CAWG builds upon the work of the C2PA by defining additional assertions that allow content creators to express individual and organizational identity and intent about their content. CAWG is a working group within the Decentralized Identity Foundation (DIF).

Last year, I published an article titled Content Authenticity 101, which explains these organizations and our motivations in more detail.

IIW is held at the lovely Computer History Museum, which recounts the formative years of our tech industry. CHM is located in Mountain View, California, right in the heart of Silicon Valley.

I’ll share a few photos of the venue and the conference. My non-technical friends might want to bow out after this section as it will rapidly descend into lots of deep geek speak.

IIW is conducted as an “unconference,” which means that there is a pre-defined structure to the conference, but not a predefined agenda.

There are several variations on how the agenda gets built at an unconference. In IIW’s case, there is an opening meeting on each morning in which people stand up and describe sessions they’d like to lead that day. Then there’s a mad rush to schedule these sessions (see photo below) and we all choose, in the moment, which sessions to attend.

You might think that not having a predefined agenda would mean that the topics that occur could be flimsy or weak or low in value. In practice, the opposite is true. Both times I’ve attended this conference so far, I’ve had to make very difficult choices about which sessions not to attend so I could attend something else which was also very compelling.

With that, here is my description of the sessions I’m attending this time around:

Drummond Reed, First Person Project

Reviewing version 1.1 of the First Person Project White Paper, just published yesterday. It’s 86 pages long. This talk is an overview of the major sections. Since it’s in the document, I won’t transcribe everything, but will instead focus on some highlights.

There’s also a slide deck if you prefer that format.

Fundamental goal: Establish a person-to-person trust layer for the internet.

Ability to impersonate real people and real situations with current generative AI tools has led to an accelerating degeneration of trust.

An essential part of this is to establish and facilitate person-to-person secure channels.

Reference out to personhood credentials paper.

Talk through how people have multiple personas that they might want to disclose selectively, for example:

Christopher Allen

This is a recap of the blog post and slide deck posted by Christopher at Musings of a Trust Architect: Five Anchors to Preserve Autonomy & Sovereignty.

A few years ago, Swiss Post Office proposed a digital ID. Referendum voted it down.

Government went back and reworked to address system to address feedback. This passed by a very narrow margin in a recent referendum. Result is based on SSI technology, but is completely government-implemented (i.e. centralized). This system is open in the sense that other information can be attached to government-issued digital identifiers; closed in the sense that only government can issue these credential.

Law says that digital identifiers are not required, but Chris is skeptical. The implementation will turn out to make physical identifiers into second-class citizens.

Swiss cultural concerns are largely about how the platform vendors (i.e. Android and iOS) will have an outsized ability to use data obtained through those credentials.

The TLS warning: Once you ship something, “good enough” becomes “stuck with it.” TLS 1.0 was ratified in 1999 with some known problems. Problems weren’t fixed until TLS 1.3 in 2019. (Gulp.)

Love this quote:

If a system cannot hear you say no, it was never built for us. It was built for them.

Chris describes this as “the least worst implementation” of a government-backed digital ID system.

Swiss ID system doesn’t have a well-established right to refuse participation.

So what can we do about it?

Eric Scouten, Adobe

Discussion followed my slide deck (PDF).

Andor Kesselman

Discussion followed Andor’s slide deck.

Started with a history of AI evolution.

Andor expects that thinking of agents as singular agents isn’t likely to remain common. Pressures are likely to lead to orchestration of agents working with each other, but that comes with increased risk of attack surface and error propagation.

Identity for AI agents is far more complex than human identity. For example: Where is the agent running? What version? What host OS? What compute center? What context did it have? What goals was it given?

Some people are now working on Know Your Agent (KYA).

Interesting question: Does DNS scale up sufficiently for agents, especially given their potentially short lifetimes?

As of yet, MCP servers aren’t really talking to each other. That will likely change soon and may substantially increase the attack surface vector.

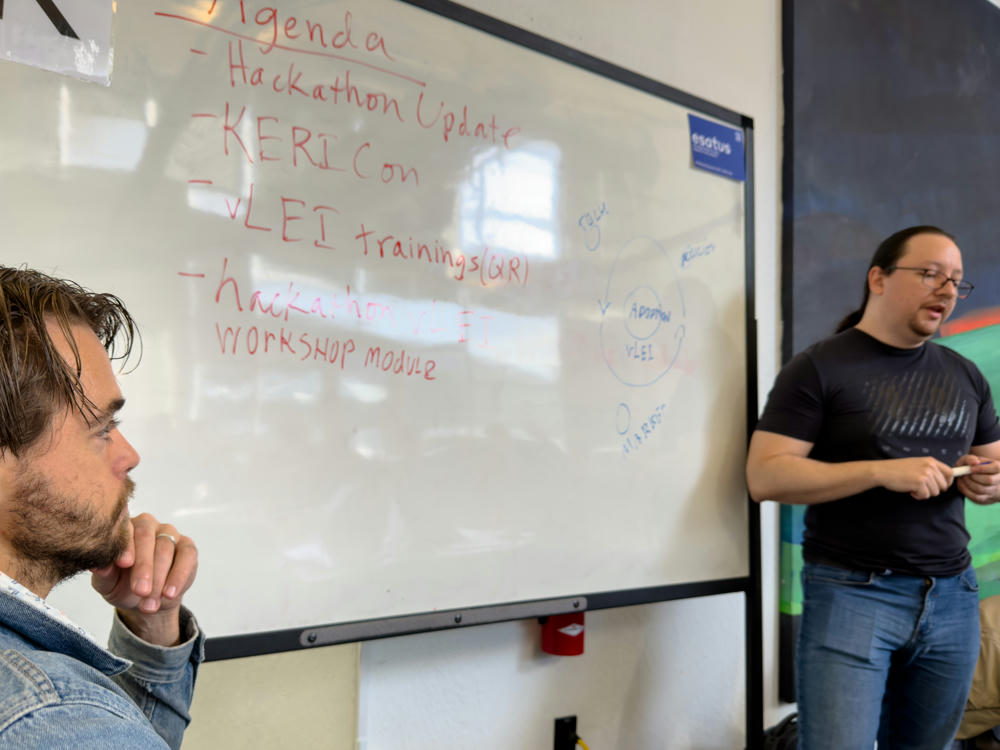

Esteban García and Kent Bull, GLEIF

GLEIF goal: Identity system for organizations at global scale.

To drive adoption, you need a “perfect storm” of things going right. GLEIF sees this as a flywheel building on the following components:

On technology specifically, GLEIF understands that developers need access to easily-onboarded technology. Focusing on developer-oriented training materials.

Hosting a global vLEI Hackathon. Intent is to promote awareness of the ecosystem and to encourage adoption/integration into existing commercial systems. Hackathon projects address these topics: finance, KYB, and supply chain. Over 100 submissions for initial round. Final presentations in next few weeks.

Be aware of vLEI training materials published by GLEIF.

KeriCON coming up: First KERI-only conference. April 2026 in Salt Lake City, just before IIW. Conference web site not yet available.

I asked a question about the ordering of the layer stack. When I reviewed KERI a couple years ago, I had trouble figuring this out. Kent provided the following sequence (top to bottom):

Karla extends an open invitation to attend the marketing and outreach sessions hosted by KERI Foundation.

Kent Bull, GLEIF

Working update on GLEIF-supported work to turn an ACDC into a W3C VC DM 1.1 or 2.0.

Fundamentally trying to solve the problem of making an ACDC credential resolvable by a client using W3C VC technology who doesn’t actually understand any of the KERI stack.

Is it possible to go the other way? tl;dr: No. IOW can a W3C VC be turned into a valid ACDC? No, because KERI has tighter security requirements than W3C. One example is the use of JSON LD contexts, which are vulnerable to schema malleability attacks. KERI doesn’t rely on any web infrastructure as part of its security posture.

Problem: A lot of the security libraries want access to private key material in order to sign.

Utah mentions that there is also work to make ACDCs available as mDLs as well. Proof of concept demonstration at the SEDI summit last week.

ACDC has introduced a new “cargo” field in the ACDC which represents a signed commitment to a particular serialization of (for example) a W3C VC credential, which then gets placed in the ACDC chain.

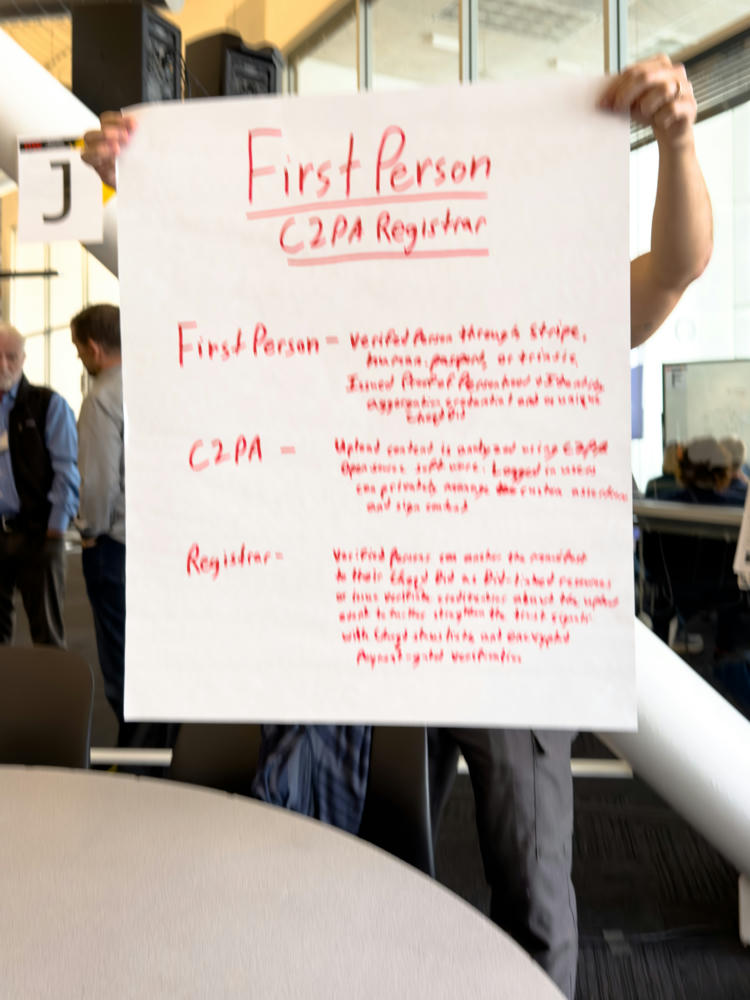

Luke Nispel, Origin Vault

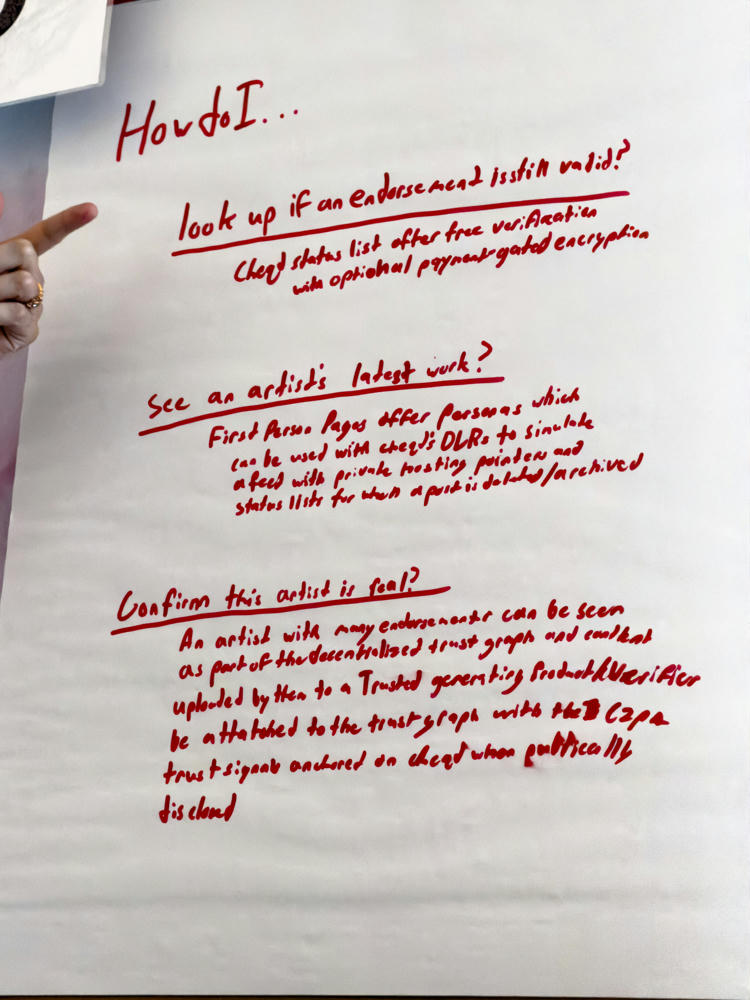

Goal: Create an endorsement network of artists that recognize each other.

Looking into how to use First Person credential, which has verified person infrastructure, to build an identity claims aggregator. Their ICA implementation allows Facebook, GitHub, Adobe, many other verified IDs. Then you can anchor things on the blockchain.

Have implemented their own C2PA verifier and signing infrastructure. Content uploaded to their service gets registered on blockchain. Registrar for verified persons on the Cheqd. Each Cheqd DID has a linked resources field which allows linkage to small information. Could point to URLs with additional information or C2PA Manifests hosted outside.

Purpose: Peer-to-peer artist network empowered with C2PA and Cheqd.

Interesting business model experiment: Artist can choose to charge the verifier to verify identity through this framework.

Heather Flanagan, Spherical Cow Consulting

Based on a recent blog post series from Heather titled The End of the Global Internet.

Compute resources (data centers), the materials needed to build computers (raw materials), etc., are not evenly distributed. Demographic evolution is going to push innovation to other parts of the world (especially Africa), but … most of the western world is aging.

What are the cultural values in Africa, where population is growing? Much more tribal. Do they think of privacy / community / consensus in the same way that we do? No. But those values are going to drive Internet culture as demographics change.

Trust frameworks are at best regional and generally not interoperable.

Upcoming post will talk about the implications for standards development. (Ooh, interesting!)

How will standards orgs handle the inevitable aging-out of current membership? New / younger members not joining at a replacement rate.

Besem Obenson

Besem working for UN for 18 years mostly in the Americas. UN has lost significant funding in 2025. How to prioritize services for displaced populations? Displaced = refugees, displaced migrants, internally-displaced, etc.

100MM+ people are forcibly displaced, including refugees, stateless people, asylum seekers, IDPs, and victims of human trafficking.

Many lack access to legally-backed identity, which means they may lose access to protection, justice, food, health care, and freedom of movement.

UNHCR manages 25MM+ records for verification, but system is not built for user control. Data misuse can endanger lives or expose people to further exploitation.

What does trust mean when the state or traffickers may both pose threats?

Besem has spoken with thousands of displaced people and also traffickers to understand what identity could look like for them.

How do you restore identity when you don’t have toehold of identity? Quite possible they are young enough that they don’t know their own names, birthplace, birthdate (even country of birth), parents, etc.

Can SSI frameworks include those who lack legal status, documentation, or digital safety?

What information can even be shared amongst UN agencies? UN agencies have a lot of power to assign and/or determine identity and thus decide what services are available.

Many people (e.g. refugees) lack legal status in the country where they are physically located. Some countries (Venezuela, Nicaragua) make it very difficult to obtain documentation.

Where is the ethical middle ground between protection, autonomy, and safety from exploitation?

Goal: Identify 2-3 actionable principles or prototype ideas to explore post-IIW.

What is the access to technology among displaced persons? Many adults have access to phones. Refugee welcome centers often offer fast charging and free wi-fi. This is helpful in regaining access to medical, educational history, etc.

(I had to step away after this; not sure what discussion followed.)

Eric Scouten and Andrew Dworschack

I did a speed run through the data model for CAWG’s identity assertion. See only slides starting with “Identity assertion in the C2PA data model” and ending with “Identity assertion / CBOR-DIAG example.”

These notes are available in the following locations:

In Andrew’s diagrams:

Phil Dustin, Kristina Yasuda, and Christian Bormann, SPRIN-D

Talking through implementation of EIDAS 2.0 which was passed into law in May 2024 and will be implemented in 2026. Lays the foundation for digital identity and trust (electronic signatures and time-stamping services) within the EU. Gives legal standing for qualified electronic signatures. EIDAS 1 (circa 2014) did not establish interoperability for digital identity.

In Germany, there is both an official government-sponsored wallet app, but it is possible for private enterprises to create their own. Other countries have very different strategies. There is an EU-wide reference implementation which Germany is building upon.

Demo of signing in with German ID card (which has NFC + PIN) and German ID wallet app. Back-end generates an SD-JWT and mDL that is stored in wallet.

Then demo of opening a bank account (demo bank, of course) using a presentation from the wallet app. Almost instant ID verification.

German law requires relying parties to register (and thus get a registration certificate) and to be transparent about the data that they’re requesting.

To become a relying party, must register with German wallet tool. Process yet to be defined. Will have an “ecosystem management portal,” but that doesn’t exist yet. Looking for serious RPs to do prototype engagements. May be difficult for non-European companies to gain access.

Interesting: The issuance and RP trust chains are still X.509 based. Only the individual wallet presentation is SD-JWT or mDoc based. Look for “Blueprint for the EUDI Wallet Ecosystem in Germany.” Also “Architecture Documentation for the German National EUDI Wallet.” Look at “PID Presentation.”

Subscribe to my free and occasional (never more than weekly) e-mail newsletter with my latest travel and other stories:

Or follow me on one or more of the socials: